tl;dr

We analyzed all Hacker News posts with more than 5 comments between January 2020 and June 2023.

Leveraging the Llama3 70B LLM, we examined both the posts and their associated comments to gain insights into how the Hacker News community engages with various topics. You can download the datasets we produced at the bottom of the article.

Use the tool below to explore various topics and the emotions they evoke. The sentiment column reports the median sentiment score, 0 being the most negative sentiment and 9 the most positive. Click the column headers to see topics that evoke strong reactions, whether positive or negative, and to identify divisive topics that tend to generate polarized commentary.

Motivation

If you have been following Hacker News for a while, you have likely developed an intuition for the topics that the community loves, and the topics it loves to hate.

If you port Factorio to run on the ZX Spectrum using Rust, you will be overwhelmed with love and karma. On the other hand, you had better don an asbestos suit if, instead of raising a funding round, you sell your startup to a private equity company so that they can add telemetry to the codebase to power targeted ads.

But that's just a vague intuition! Since the community is a big believer in rationality and data science (the term is divisive though), we would be in a better position if we could back our hunch with proper data analysis.

Also, it would give us an excuse to play with large language models which, all the AI hype aside, are mind-blowingly effective at practical tasks like this. And we happen to be developers of an open-source tool, Metaflow, written in Python, which makes it fun and educational to hack on projects like this.

Type the bolded words into the textbox above to see if we are optimizing for an appropriate emotional response.

What topics trend on Hacker News?

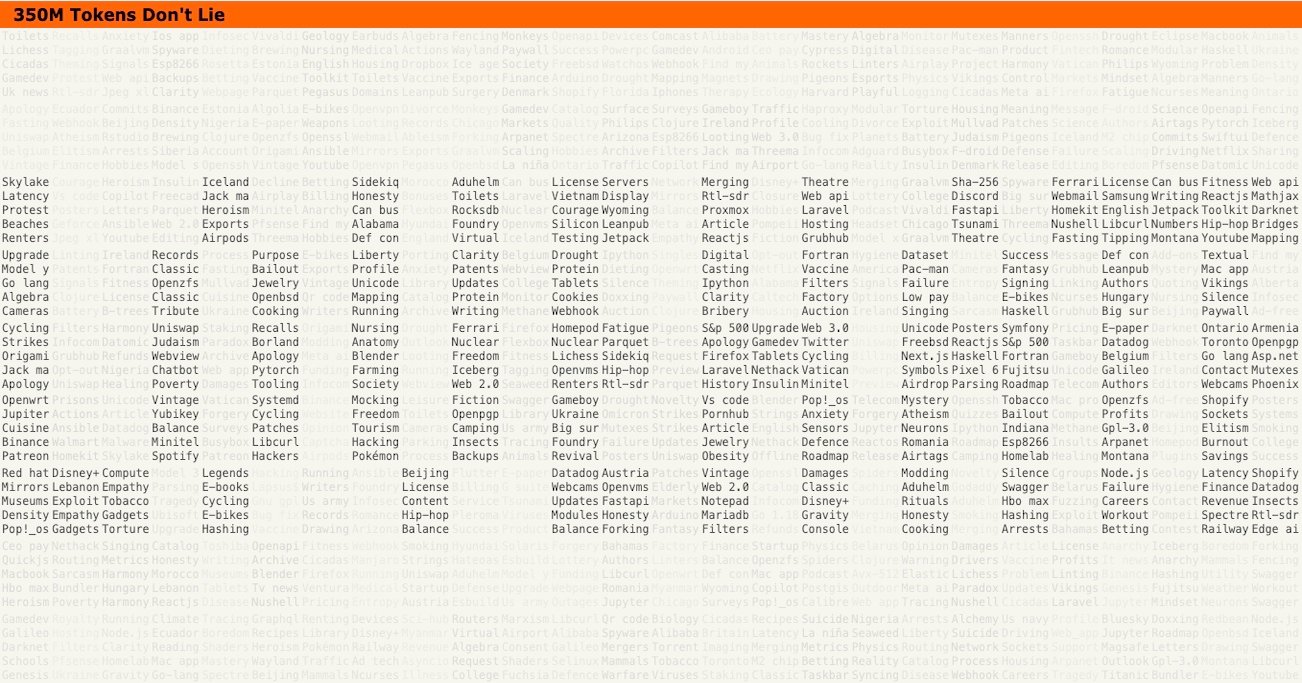

As described in the implementation section below, we downloaded about 100,000 pages posted on Hacker News to understand what content resonates with the community. We focus on posts that received at least 20 upvotes and 5 comments, prompting a large language model to come up with ten tags that best describe each page.

Here are the top 20 topics, aggregated from the 100,000 pages, along with the number of posts covering each topic:

The top topics are hardly surprising. However, the community is known for having diverse intellectual interests: the top 20 topics represent only 10% of the topics covered across posts. You can see this for yourself using the tool above, which covers all 14,000 topics associated with at least 5 posts.
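The aggregation behind these counts can be sketched in a few lines. This is an illustrative sketch, not the code used for this article. It assumes the tags are loaded as a dict from post ID to a list of topics (the shape of `post-topics.json`, described at the end of the article). It also reads "10% of the topics" as a share of all topic mentions, which is our assumption. `summarize_topics` is a hypothetical helper.

```python
from collections import Counter


def summarize_topics(post_topics, top_n=20, min_posts=5):
    """Count posts per topic (hypothetical helper).

    post_topics: dict mapping post_id -> list of topic strings.
    Returns (top_n most common topics, their share of all topic mentions,
    sorted list of topics associated with at least min_posts posts).
    """
    counts = Counter()
    for topics in post_topics.values():
        counts.update(set(topics))  # count a topic at most once per post
    top = counts.most_common(top_n)
    total = sum(counts.values())
    share = sum(n for _, n in top) / total if total else 0.0
    frequent = sorted(t for t, n in counts.items() if n >= min_posts)
    return top, share, frequent


# Made-up example data
sample = {
    1: ["Programming", "Rust"],
    2: ["Programming", "Python"],
    3: ["AI", "Programming"],
}
top, share, frequent = summarize_topics(sample, top_n=1, min_posts=2)
print(top, share, frequent)
```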

Have the top topics evolved over time? You bet. Here are the top 10 trending topics:

Although the dataset only extends to June 2023, we can see a tsunami of AI, natural language processing, and related topics. Sadly, layoffs started trending in mid-2022 as well. The rise of AI articles likely explains the growth in the Technology topic too.

What’s declining

In 2020-2022, a major macro-trend was everything related to the COVID pandemic, which fortunately is no longer a pressing issue. Interestingly, August 2021 was a tumultuous month, with Apple's proposed CSAM scanning in particular causing an uproar in posts related to Privacy and Apple.

If you want to take a walk down memory lane, consider the past topics on the left, which have not been covered once since January 2022:

| Before Jan 2022 📉 | After Jan 2022 📈 |
|---|---|
| George Floyd | GPT-4 |
| Herd immunity | Stable Diffusion |
| Antibodies | Russia-Ukraine War |
| iOS 14 | Ventura |
| Freenode | Bank Failure |
| Suez Canal | Midjourney |
| Wallstreetbets | Hiring Freeze |
| Hydroxychloroquine | FIDO Alliance |
| Infection rates | Cost of living crisis |

Correspondingly, the topics on the right did not exist before January 2022. It doesn't take a PhD in Economics to understand how Wallstreetbets got replaced by Cost of living crisis, Hiring freeze, and Bank failure.
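A before/after comparison like the table above boils down to two set differences around a cutoff date. The sketch below is illustrative only. It assumes each post is available as a `(post_date, topics)` pair, a hypothetical shape that would come from joining the topic tags with post metadata, and the sample data is made up.

```python
from datetime import date


def split_by_cutoff(posts, cutoff):
    """Return (topics seen only before cutoff, topics seen only on/after it).

    posts: iterable of (post_date, list_of_topics) pairs (hypothetical shape).
    """
    before, after = set(), set()
    for post_date, topics in posts:
        (before if post_date < cutoff else after).update(topics)
    return before - after, after - before


# Made-up example data
posts = [
    (date(2021, 3, 1), ["Suez Canal", "Shipping"]),
    (date(2021, 8, 5), ["CSAM", "Apple"]),
    (date(2023, 3, 14), ["GPT-4", "Apple"]),
]
gone, new = split_by_cutoff(posts, date(2022, 1, 1))
print(sorted(gone), sorted(new))
```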

But how do people actually feel about these topics?

Importantly, sharing or upvoting a post doesn't imply endorsement; often the opposite. Hence, to truly understand the dynamics of a community, we need to analyze how people react to posts, as expressed through their comments.

To do this, we reconstructed the comment threads associated with the posts in the dataset and asked an LLM to classify the sentiment of the discussion between 0 and 9, zero being an all-out flamewar and 9 indicating harmony and positivity.

Our dutiful LLM took the job of a virtual community moderator and went through 100k comment threads in about 9 hours, reading through 230M words spanning the full emotional gamut of bitterness, passion, wisdom, humor, and love.

Here's what the LLM came back with in terms of the distribution of sentiments:

First, the LLM is totally baffled about what a neutral discussion looks like: there are no 5s in the results. Or perhaps this is a snarky note by the LLM that people are incapable of unemotional, neutral discourse.

Second, the sentiments are clearly skewed toward the positive side, which aligns with our personal experience with the site. The bias toward positivity and optimism is a key reason why we open Hacker News daily. There's enough vitriol elsewhere.

It is also worth noting that at least some of the low scores assigned by the machine seem to be bogus. For instance, this post by BentoML was given a score of one, even though the sentiment seems largely positive and supportive. All the usual caveats about LLMs apply.

Now, with the topics and the sentiment scores at hand, we can finally give a scientific answer to the question of what the community loves and loves to hate:

| Love 😍 | Hate 😠 |
|---|---|
| Programming | FTX |
| Computer Science | Police Misconduct |
| Open Source | Sam Bankman-Fried |
| Python | Xinjiang |
| Game Development | Torture |
| Rust | Worker Monitoring |
| Electronics | Cost Cutting |
| Mathematics | Racial Profiling |
| Functional Programming | Online Safety Bill |
| Programming Language | War on Terror |
| Physics | Atlassian |
| Embedded Systems | CSAM |
| Self Improvement | NYPD |
| Database | Alameda Research |
| Unix | International Students |
| Astronomy | TSA |
| Retro Computing | EARN IT Act |
| Nostalgia | Vehicle Functions |
| Debugging | Bloatware |

Geeks, nerds, and hackers should feel right at home! Interestingly, while the community tends to be visionary and forward-looking (on technical topics at least), it definitely has a soft spot for (technical) nostalgia. While the modern world is great, we miss the ZX Spectrum, Z80 assembly, and the 8086 dearly. At least we find some comfort in PICO-8.

On the anger-inducing side, most topics need no explanation. It is worth clarifying, though, that Hacker News doesn't hate International Students. Rather, the posts related to them tend to be overwhelmingly negative, reflecting the community's sympathy for the challenges faced by those studying abroad.

Comically, Hacker News is not a community of car enthusiasts. When we talk about cars, it is because there is something wrong with them. For more insights like this, use the tool at the top of the page to explore the diverse landscape of HN topics in detail.
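Ranking topics into "love" and "hate" amounts to grouping post sentiment scores by topic and sorting by the median, the statistic the tool reports. This is a minimal sketch, not the code behind the table. It assumes the two mappings described at the end of the article (`post-topics.json` and `post-sentiment.json`) are loaded as dicts. The `min_posts` threshold is a hypothetical parameter that keeps rare topics from dominating.

```python
from collections import defaultdict
from statistics import median


def rank_topics_by_sentiment(post_topics, post_sentiment, min_posts=5):
    """Sort topics by the median sentiment of their posts, highest first.

    post_topics: dict post_id -> list of topics
    post_sentiment: dict post_id -> sentiment score
    Topics with fewer than min_posts scored posts are skipped.
    """
    scores = defaultdict(list)
    for post_id, topics in post_topics.items():
        if post_id in post_sentiment:
            for topic in set(topics):
                scores[topic].append(post_sentiment[post_id])
    medians = {t: median(s) for t, s in scores.items() if len(s) >= min_posts}
    # Loved topics end up at the front of the list, hated ones at the back
    return sorted(medians.items(), key=lambda kv: kv[1], reverse=True)


# Made-up example data
topics = {1: ["Rust"], 2: ["Rust"], 3: ["FTX"], 4: ["FTX"]}
sentiment = {1: 8, 2: 7, 3: 1, 4: 2}
print(rank_topics_by_sentiment(topics, sentiment, min_posts=2))
```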

Some topics are just divisive

Besides topics that are unimodally love- or hate-inducing, some topics are bimodal: sometimes a post about the topic generates a highly positive response, other times a flamewar. Examples include:

- GNOME: KDE vs. GNOME, a battle that has been raging for 25 years.

- Google: a dominant force on the Web, for both good and bad.

- Government regulations: damned if you do and damned if you don't.

- Venture capital: the lifeblood of Silicon Valley and a source of endless gossip.

See more by sorting by the divisive column in the tool above. To rank highly by divisiveness score, a topic must be associated with negative and positive posts in roughly equal measure, and with few neutral ones.
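The article doesn't spell out the divisiveness formula, so here is one hypothetical score that satisfies the description: it is highest when a topic's posts split evenly between negative and positive, and neutral posts pull it down. The thresholds `neg_max=3` and `pos_min=6` on the 0-9 scale are assumptions.

```python
def divisiveness(scores, neg_max=3, pos_min=6):
    """Hypothetical divisiveness score in [0, 1] for one topic.

    scores: sentiment scores (0-9) of the posts tagged with the topic.
    1.0: half the posts are negative, half positive, none neutral.
    0.0: all posts fall on one side, or all are neutral.
    """
    if not scores:
        return 0.0
    n = len(scores)
    p_neg = sum(s <= neg_max for s in scores) / n
    p_pos = sum(s >= pos_min for s in scores) / n
    # min() rewards balance; neutral posts shrink both fractions
    return 2 * min(p_neg, p_pos)


print(divisiveness([1, 8, 2, 9]))  # 1.0: evenly split
print(divisiveness([7, 8, 8, 9]))  # 0.0: uniformly positive
print(divisiveness([1, 8, 4, 5]))  # 0.5: split, but half neutral
```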

Is the mood improving?

We can plot the average sentiment over time, as expressed in daily comment threads:

The data proves that things sucked in August 2021 (or perhaps it was just the Apple debacle highlighted in the chart above). Overall, there is a distinct but modest downward trend in the average sentiment.
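One way to quantify such a trend is to average the scores per day and fit a least-squares line through the daily averages. The sketch below assumes the scored threads are available as `(date, score)` pairs. The article does not say how the trend was measured; the ordinary least-squares slope is our illustrative choice.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def daily_average(scored_threads):
    """Mean sentiment per calendar day; input yields (date, score) pairs."""
    by_day = defaultdict(list)
    for day, score in scored_threads:
        by_day[day].append(score)
    return {day: mean(scores) for day, scores in sorted(by_day.items())}


def trend_slope(daily):
    """Ordinary least-squares slope of the daily averages, in score units per day."""
    days = list(daily)
    xs = [(d - days[0]).days for d in days]  # days since the first observation
    ys = list(daily.values())
    x_bar, y_bar = mean(xs), mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den if den else 0.0


# Made-up example data
threads = [
    (date(2021, 1, 1), 7), (date(2021, 1, 1), 5),
    (date(2021, 1, 2), 5),
    (date(2021, 1, 3), 4),
]
daily = daily_average(threads)
print(trend_slope(daily))  # -1.0: the toy data drops one point per day
```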

It would require a deeper analysis to understand why this is the case. Setting aside the obvious hypothesis that life is just getting worse (which may not be true), another hypothesis would be a variant of Eternal September. It takes conscious and tireless effort to maintain a positive mood in a growing community. Kudos to the HN moderators for keeping the community thriving and positive over time!

Implementation

A reason to be excited and optimistic about the future is the very existence of this article. While natural language processing and sentiment analysis have been around for a long time, the quality, versatility, and ease of use afforded by LLMs are truly remarkable.

Achieving this quality of topics and sentiment scores with a messy dataset like this one would have required a PhD thesis's worth of effort just a few years ago, and quite likely the results would have been worse. In contrast, we developed all the code for this article in about seven hours. Processing 350M tokens with even a decent-sized model would have required a supercomputer a decade ago, whereas in our case it took about 16 hours on widely available hardware.

Most amazingly, all the building blocks, LLMs included, are available as open source! Let's do a quick overview (with code and data), showing how you can repeat the experiment at home.

Here's what we did at a high level:

Each white box in the diagram is a Metaflow flow, linked below:

- HNSentimentInit creates a list of posts to analyze.
- HNSentimentCrawl downloads the posts.
- HNSentimentAnalyzePosts parses the posts and runs them through an LLM.
- HNSentimentCommentData reconstructs comment threads based on a Hacker News dataset in Google BigQuery. We should/could have used this in step (1) too. Next time!
- HNSentimentAnalyzeComments runs the comment threads through an LLM.
- Data analyses and the charts shown above are produced in notebooks (the grey box in the diagram).

Here's what the flows do:

Downloading posts

First, we wanted to analyze the topics covered by Hacker News posts that have generated some discussion. Using a publicly available dataset of HN posts (thanks Julien!), we queried all posts between January 2020 and June 2023 (the most recent date available in this dataset) that had at least 20 upvotes and more than 5 comments, which resulted in about 100,000 posts.

Here's the simple Metaflow flow that did the job, made easy thanks to DuckDB.
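The selection criteria fit in a single SQL query. The sketch below runs it with Python's built-in `sqlite3` so it is self-contained; it stands in for the DuckDB query in the flow and is not that query. The table and column names (`posts`, `posted_at`, `score`, `descendants`) and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical schema; the real HN dataset's column names may differ.
QUERY = """
SELECT id, url
FROM posts
WHERE posted_at >= ? AND posted_at < ?
  AND score >= 20          -- at least 20 upvotes
  AND descendants > 5      -- more than 5 comments
"""


def select_posts(conn, start, end):
    return conn.execute(QUERY, (start, end)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE posts (id INTEGER, url TEXT, posted_at TEXT, score INTEGER, descendants INTEGER)"
)
conn.executemany(
    "INSERT INTO posts VALUES (?, ?, ?, ?, ?)",
    [
        (1, "https://a.example", "2020-03-01", 120, 40),
        (2, "https://b.example", "2021-06-15", 15, 12),   # too few upvotes
        (3, "https://c.example", "2022-09-09", 30, 5),    # not more than 5 comments
        (4, "https://d.example", "2019-12-31", 200, 90),  # before the window
    ],
)
print(select_posts(conn, "2020-01-01", "2023-07-01"))
```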

Since the 100,000 posts are mostly on different domains, we can safely download them in parallel without DDoS'ing the servers. It took only around 25 minutes to download the pages with 100 parallel workers (see here how).
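The linked approach isn't reproduced here. As a rough stand-in, a thread pool from the standard library can keep 100 downloads in flight at once. This sketch does no per-domain rate limiting, which relies on the assumption above that the URLs are spread across many domains. `crawl` and `fetch` are hypothetical helpers.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def fetch(url, timeout=10):
    """Download one page; return None on any error (timeout, HTTP error, bad URL)."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.read()
    except Exception:
        return None


def crawl(urls, fetcher=fetch, workers=100):
    """Fetch urls concurrently; returns dict url -> bytes (or None on failure)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in the same order as urls
        return dict(zip(urls, pool.map(fetcher, urls)))
```

Passing a different `fetcher` makes the function easy to test offline, for example `crawl(["a", "b"], fetcher=lambda u: u.upper().encode())`.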

Large-scale document understanding with LLMs

Parsing the text content from random HTML pages used to be a huge PITA, but BeautifulSoup makes it delightfully easy.
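For readers without BeautifulSoup installed, here is a dependency-free sketch of the same idea using the standard library's `html.parser`. It collects visible text and skips `<script>` and `<style>` contents. This is a swapped-in technique, not what the flow uses, and it is far less forgiving of broken markup than BeautifulSoup.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


print(html_to_text("<h1>Hi</h1><style>p{}</style><p>HN <b>rocks</b></p>"))  # Hi HN rocks
```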

At this point, we have a relatively clean dataset of about 100,000 text documents with more than 500M tokens in total. Processing the dataset once with a state-of-the-art LLM, say, GPT-4o or Llama3 70b on AWS Bedrock, would cost around $1,300 (using the OpenAI batch API), not counting the output tokens. Besides the cost, time is an issue: we want to process the dataset as quickly as possible so we can evaluate the results quickly and rinse and repeat if necessary. Hence we don't want to get rate-limited or otherwise bottlenecked by the APIs.
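The cost figure is simple arithmetic: tokens times price. The price below is an assumption, GPT-4o's launch list price of $5.00 per 1M input tokens with the Batch API's 50% discount. Check current pricing before relying on it.

```python
def estimate_input_cost(total_tokens, usd_per_million_tokens):
    """Input-token cost (USD) of one pass over a dataset; output tokens excluded."""
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Assumed price: $2.50 per 1M input tokens (GPT-4o batch pricing at launch)
print(estimate_input_cost(500_000_000, 2.50))  # 1250.0
```

With "more than 500M" tokens, this lands in the same ballpark as the roughly $1,300 quoted above.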

We have previously written about our success with NVIDIA NIM microservices, which provide a rapidly growing library of LLMs and other GenAI models as prepackaged images, fully optimized to take advantage of vLLM, TensorRT-LLM, and the Triton Inference Server, so you don't need to spend time chasing the latest tricks in LLM inference.

Since we had NIMs included in our Outerbounds deployment, we just added @nim to our flow to get access to a high-throughput LLM endpoint that costs only as much as the auto-scaling GPUs it runs on, in this case, four H100 GPUs. Naturally, you can hit an LLM endpoint of your choosing; there are many options these days.

Prompting an LLM to produce a list of topics for each post

Our prompt is simple:

Assign 10 tags that best describe the following article.

Reply only the tags in the following format:

1. first tag

2. second tag

N. Nth tag

---

[First 5000 tokens from a web page]

The Llama3 70b model we used has an 8,000-token context window, but we decided to limit the number of tokens to 5,000 to account for differences in tokenizer behavior, making sure that we don't go past the limit.
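Building the prompt and parsing the numbered reply can be sketched as below. Two caveats apply. First, splitting on whitespace is only a crude stand-in for the model's tokenizer, so a real pipeline should count tokens with the model's own tokenizer. Second, `build_topic_prompt` and `parse_tags` are hypothetical helpers, not code from the flows.

```python
import re

PROMPT_HEADER = (
    "Assign 10 tags that best describe the following article.\n"
    "Reply only the tags in the following format:\n"
    "1. first tag\n"
    "2. second tag\n"
    "N. Nth tag\n"
    "---\n"
)


def build_topic_prompt(text, max_tokens=5000):
    # Crude approximation: treats whitespace-separated words as tokens
    words = text.split()
    return PROMPT_HEADER + " ".join(words[:max_tokens])


TAG_LINE = re.compile(r"^\s*\d+\.\s*(.+?)\s*$")


def parse_tags(reply):
    """Extract tags from a numbered-list reply, ignoring any other lines."""
    tags = []
    for line in reply.splitlines():
        m = TAG_LINE.match(line)
        if m:
            tags.append(m.group(1))
    return tags


print(parse_tags("Here are the tags:\n1. Rust\n2. ZX Spectrum\n3. Retro Computing"))
```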

Processing about 140M input tokens this way took about 9 hours. We were able to increase throughput to around 4,300 input tokens per second by hitting the model concurrently with 5 workers, as shown in our UI below, to take advantage of dynamic batching and other optimizations.
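The concurrency pattern and the throughput arithmetic can both be sketched briefly. `call_llm` is a hypothetical function that sends one prompt to whatever endpoint you use and returns the reply. The point of the sketch is only that several requests are in flight at once, which gives a dynamically batching server more work to group together.

```python
from concurrent.futures import ThreadPoolExecutor


def run_concurrently(prompts, call_llm, workers=5):
    """Keep up to `workers` requests in flight; replies keep the prompts' order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(call_llm, prompts))


def hours_to_process(total_tokens, tokens_per_second):
    """Wall-clock hours needed at a sustained throughput."""
    return total_tokens / tokens_per_second / 3600


# 140M input tokens at ~4,300 tokens/s
print(round(hours_to_process(140_000_000, 4_300), 1))  # 9.0
```

The result is consistent with the roughly 9 hours reported above.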

Instead of trying to download 100,000 comment pages directly from Hacker News, we leveraged a Hacker News dataset in Google BigQuery. Annoyingly, comments in the database are not directly linked to their parent post, so we had to implement a small function to reconstruct the comment threads.
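The function itself isn't shown in the article. Here is a minimal sketch of the idea, assuming each comment row carries the ID of its immediate parent, which may be another comment or the post. Following the parent pointers upward leads to the post, and memoizing the result keeps long threads cheap. The `(comment_id, parent_id, text)` row shape is an assumption.

```python
def find_root(item_id, parent_of, cache):
    """Follow parent pointers up to the post a comment ultimately belongs to."""
    path = []
    node = item_id
    while node in parent_of:  # posts have no parent entry, so the loop stops there
        if node in cache:
            node = cache[node]
            break
        path.append(node)
        node = parent_of[node]
    for n in path:  # memoize every comment visited on the way up
        cache[n] = node
    return node


def reconstruct_threads(comments):
    """Group comments by post.

    comments: iterable of (comment_id, parent_id, text) rows, e.g. ordered by time.
    Returns dict post_id -> list of comment texts in input order.
    """
    comments = list(comments)
    parent_of = {cid: pid for cid, pid, _ in comments}
    cache, threads = {}, {}
    for cid, _, text in comments:
        root = find_root(cid, parent_of, cache)
        threads.setdefault(root, []).append(text)
    return threads


# Made-up example data
rows = [(11, 1, "first"), (12, 11, "reply"), (13, 12, "reply to reply"), (21, 2, "other post")]
print(reconstruct_threads(rows))
```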

We can't run the function in BigQuery directly, but luckily we can easily export the data as Parquet files. Loading the resulting 16M rows into DuckDB and scanning through them was a breeze, aided by the fact that Metaflow knows how to load data fast. We just added @resources(disk=10000, cpu=8, memory=32000) to run the function on a large enough instance.

Prompting an LLM to analyze sentiment

With the comment threads at hand, we were able to run them through our LLM with this prompt:

In the scale between 0-10 where 0 is the most negative sentiment

and 10 is the most positive sentiment, rank the following discussion.

Reply in this format:

SENTIMENT X

where X is the sentiment rating

---

[First 3000 tokens from a comment thread]

Getting a simple structured output like this seems to work without issues. We processed some 230M input tokens this way, which took only about 7 hours since we needed only two output tokens.
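Parsing the `SENTIMENT X` reply takes a single regular expression. In this sketch, replies that don't follow the format, or that fall outside the prompt's 0-10 range, come back as `None` so they can be dropped or retried. `parse_sentiment` is a hypothetical helper.

```python
import re

SENTIMENT_RE = re.compile(r"SENTIMENT\s+(\d+)")


def parse_sentiment(reply, lo=0, hi=10):
    """Return the integer after 'SENTIMENT', or None if missing or out of range."""
    m = SENTIMENT_RE.search(reply)
    if not m:
        return None
    score = int(m.group(1))
    return score if lo <= score <= hi else None


print(parse_sentiment("SENTIMENT 7"))        # 7
print(parse_sentiment("I think it's fine"))  # None
```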

You can reproduce all the steps above using your favorite toolchain. Here are the key reasons why we used Metaflow and why you might want to consider it too:

- Staying organized without effort: a big benefit compared to random Python scripts or notebooks is that Metaflow persists all artifacts automatically, tracks all executions, and keeps everything organized. We relied on Metaflow's namespaces to execute large and expensive runs alongside prototypes, knowing that the two can't interfere with one another. We used Metaflow tags to coordinate data sharing between flows while they were being developed independently.
- Easy cloud scaling: crawling required horizontal scaling, DuckDB required vertical scaling, and LLMs required a GPU backend. Metaflow handled all these cases out of the box.
- Highly available orchestrator: running a large dataset through an LLM can cost hundreds of dollars. You don't want the run to fail due to random issues. We relied on a highly available Argo Workflows orchestrator, which Metaflow supports out of the box, to keep the run going for hours.

You can do all of the above using open-source Metaflow, but we got a few additional benefits by running the flows on the Outerbounds Platform. It is simply fun to develop code like this, alongside notebooks, with VSCode running on cloud workstations. Scaling to the cloud is smooth sailing, and @nim allowed us to hit LLMs without worrying about cost or rate limiting.

If features like this sound relevant to your interests, we are happy to get you started for free.

Dive deeper at home

There's much more that can be analyzed and visualized with this dataset. Instead of spending a few thousand dollars hitting OpenAI APIs, you can download the topics and sentiments we created:

- post-sentiment.json contains a mapping post_id -> sentiment_score
- post-topics.json contains a mapping post_id -> [topics]
- topics-data.json contains a cleaned and joined dataset based on the above JSONs, powering the tool at the top of this article.

You can find metadata associated with post IDs in this HuggingFace dataset and in the Google BigQuery Hacker News dataset.

To view a post, simply open https://news.ycombinator.com/item?id=[post_id].
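As a starting point for your own analysis, the two mappings can be joined on post ID, with a link to each post added. This sketch assumes both files are JSON objects keyed by post ID, as described above. Because JSON object keys are always strings, post IDs stay strings here.

```python
import json


def load_json(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def join_posts(sentiment, topics):
    """Join post_id -> score and post_id -> [topics]; keep posts present in both."""
    return {
        post_id: {
            "sentiment": sentiment[post_id],
            "topics": topics[post_id],
            "url": f"https://news.ycombinator.com/item?id={post_id}",
        }
        for post_id in sentiment.keys() & topics.keys()
    }


# Usage, assuming the files were downloaded to the working directory:
# posts = join_posts(load_json("post-sentiment.json"), load_json("post-topics.json"))
```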

For instance, it would be interesting to look into the correlation between post domains, sentiments, and topics. Our hunch is that certain domains produce predominantly positive sentiments and others predominantly negative ones. Or, do divisive topics garner more points?

If you build something fun with this data, please link back to this blog article and let us know! Join the Metaflow Slack and drop a note on #ask-metaflow.

To support our open-source efforts, please give Metaflow a star! 🤗